- 与对预先存在的轨迹的完整数据集进行评估相比,更容易开始

- 从初始查询到成功或不成功的解决的端到端覆盖

- 能够检测应用多次迭代中的重复行为或上下文丢失

本指南将向您展示如何模拟多轮交互并使用开源

本指南将向您展示如何模拟多轮交互并使用开源 openevals 包评估它们,该包包含预构建的评估器和用于评估 AI 应用的其他方便资源。它还将使用 OpenAI 模型,尽管您也可以使用其他提供商。

设置

首先,确保已安装所需的依赖项:如果您使用

yarn 作为包管理器,您还需要手动安装 @langchain/core 作为 openevals 的对等依赖项。这对于 LangSmith 评估通常不是必需的。运行模拟

您需要开始使用两个主要组件:app:您的应用程序或包装它的函数。必须接受单个聊天消息(带有”role”和”content”键的字典)作为输入参数,并接受thread_id作为 kwarg。应接受其他 kwargs,因为将来的版本可能会添加更多。返回至少带有 role 和 content 键的聊天消息作为输出。user:模拟用户。在本指南中,我们将使用名为create_llm_simulated_user的导入预构建函数,该函数使用 LLM 生成用户响应,尽管您也可以创建自己的。

openevals 中的模拟器为每个轮次将来自 user 的单个聊天消息传递给您的 app。因此,如果需要,您应该基于 thread_id 在内部有状态地跟踪当前历史记录。

以下是模拟多轮客户支持交互的示例。本指南使用一个简单的聊天应用,该应用包装对 OpenAI 聊天完成 API 的单个调用,但是这是您调用应用程序或智能体的地方。在此示例中,我们模拟的用户扮演特别激进的客户的角色:

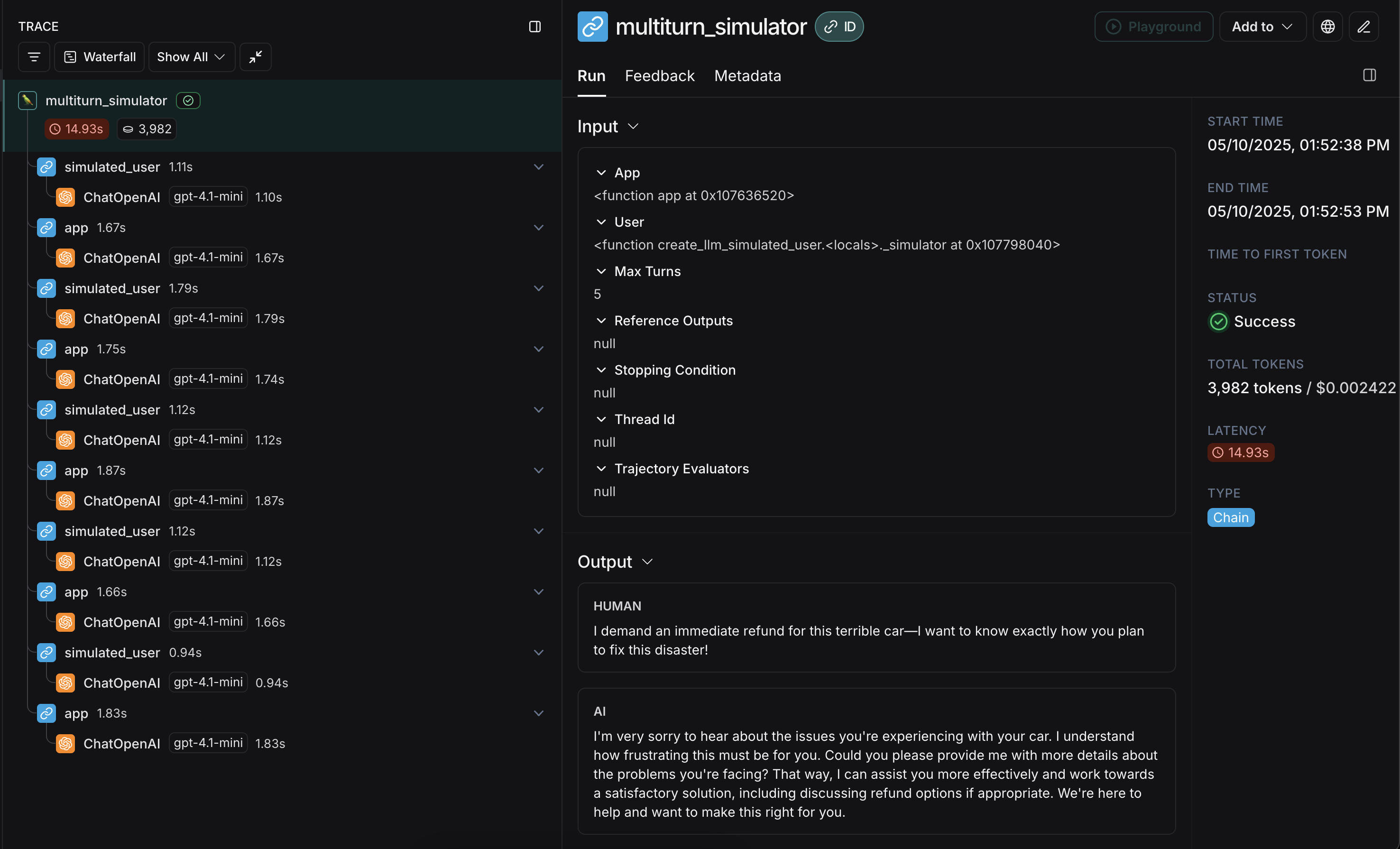

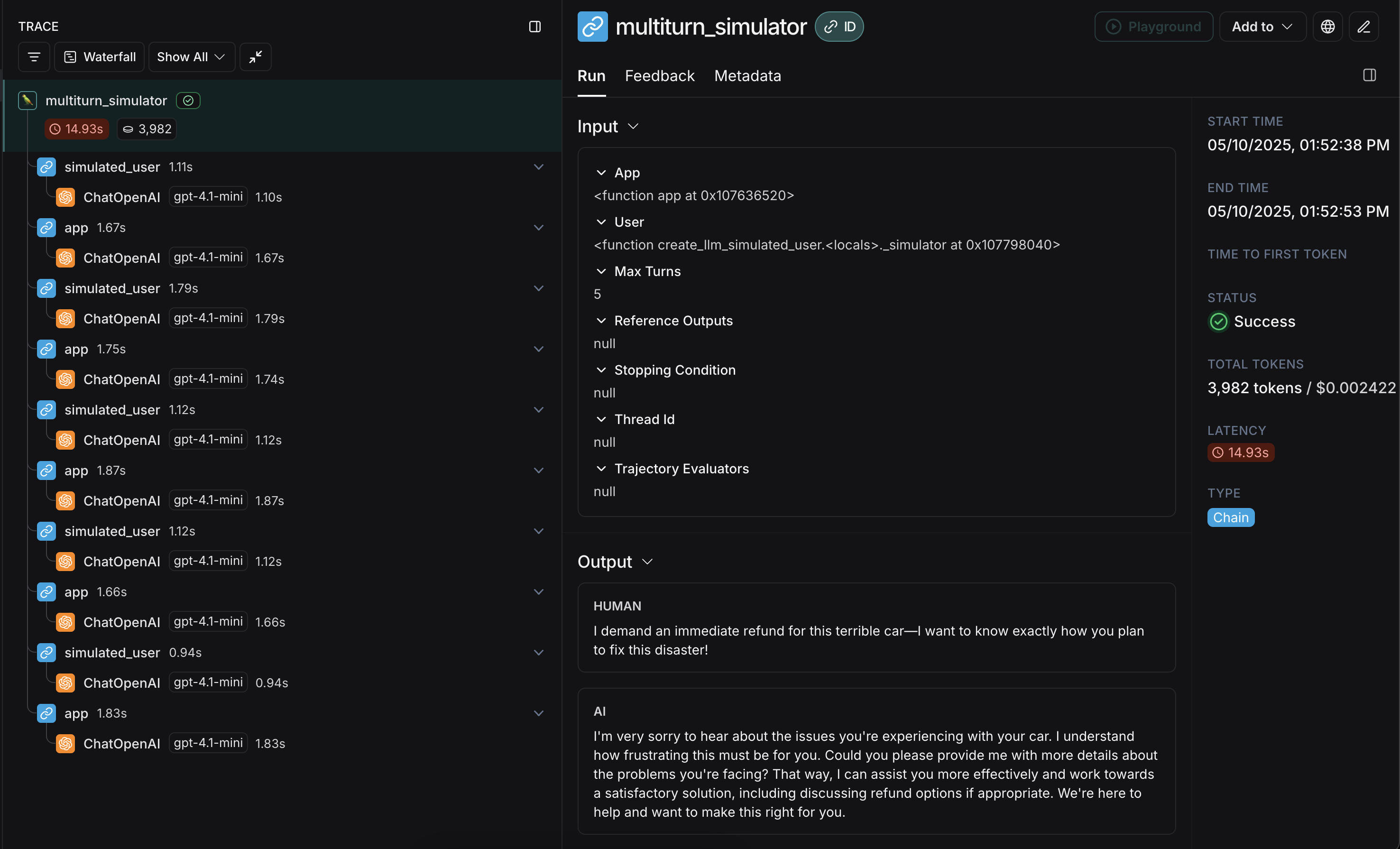

user 生成初始查询,然后在达到 max_turns 之前来回传递响应聊天消息(你也可以传递一个 stopping_condition,它接受当前轨迹并返回 True 或 False - 有关更多信息,请参阅 OpenEvals README)。返回值是构成对话轨迹的最终聊天消息列表。

有多种方法可以配置模拟用户,例如让它在模拟的前几轮返回固定响应,以及整个模拟。有关完整详细信息,请查看 OpenEvals README。

app 和 user 的响应交错:

恭喜!你刚刚运行了第一个多轮模拟。接下来,我们将介绍如何在 LangSmith 实验中运行它。

恭喜!你刚刚运行了第一个多轮模拟。接下来,我们将介绍如何在 LangSmith 实验中运行它。

在 LangSmith 实验中运行

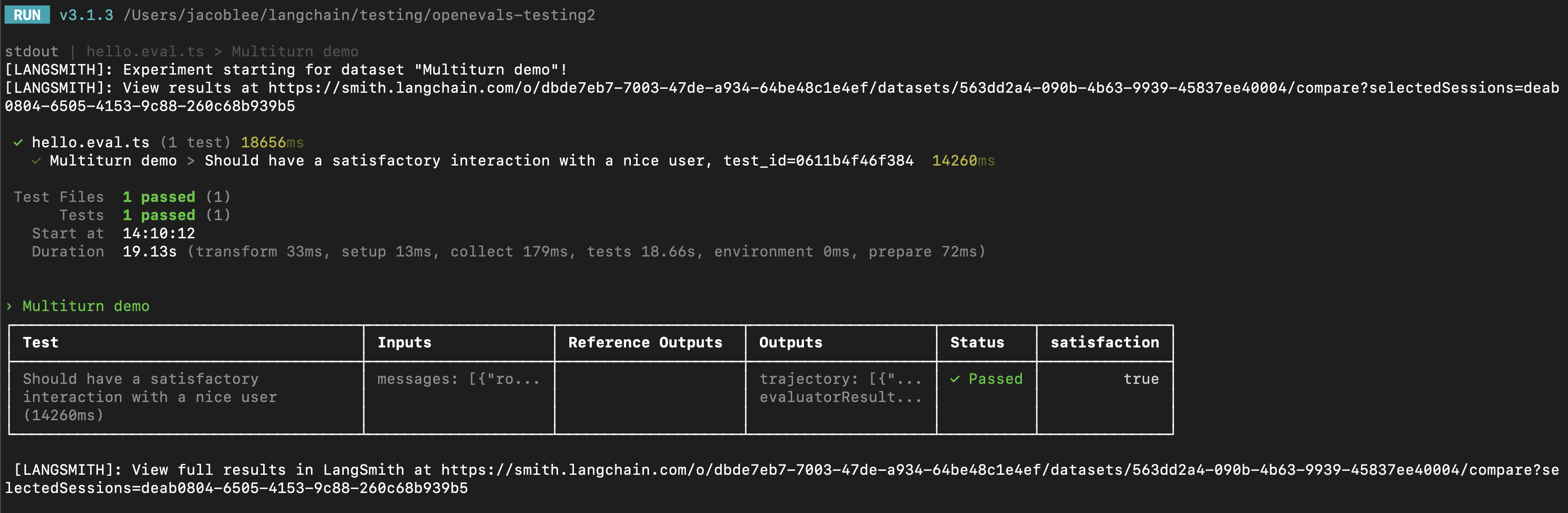

你可以将多轮模拟的结果用作 LangSmith 实验的一部分,以跟踪性能和随时间推移的进度。对于这些部分,熟悉 LangSmith 的pytest(仅限 Python)、Vitest/Jest(仅限 JS)或 evaluate 运行器中的至少一个会很有帮助。

使用 pytest 或 Vitest/Jest

请参阅以下指南,了解如何使用 LangSmith 与测试框架的集成来设置评估:

trajectory_evaluators 参数。这些评估器将在模拟结束时运行,将最终的聊天消息列表作为 outputs kwarg。因此,你传递的 trajectory_evaluator 必须接受此 kwarg。

以下是一个示例:

以下是一个示例:

trajectory_evaluators 返回的反馈,并将其添加到实验中。还要注意,测试用例使用模拟用户上的 fixed_responses 参数以特定输入开始对话,你可以记录它并将其作为存储数据集的一部分。

你可能还会发现将模拟用户的系统提示作为记录数据集的一部分也很方便。

使用 evaluate

你也可以使用 evaluate 运行器来评估模拟的多轮交互。这与 pytest/Vitest/Jest 示例在以下方面略有不同:

- 模拟应该是你的

target函数的一部分,你的目标函数应该返回最终轨迹。- 这将使轨迹成为 LangSmith 将传递给评估器的

outputs。

- 这将使轨迹成为 LangSmith 将传递给评估器的

- 不要使用

trajectory_evaluators参数,你应该将评估器作为参数传递给evaluate()方法。 - 你需要一个现有的输入数据集和(可选)参考轨迹。

修改模拟用户角色

上述示例对所有输入示例使用相同的模拟用户角色运行,该角色由传递给create_llm_simulated_user 的 system 参数定义。如果你想为数据集中的特定项目使用不同的角色,可以更新数据集示例以包含具有所需 system 提示的额外字段,然后在创建模拟用户时传递该字段,如下所示: