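create_agent

The new standard for building agents in LangChain, replacing langgraph.prebuilt.create_react_agent.

Standard content blocks

A new content_blocks property that provides unified access to modern LLM features across providers.

Simplified namespace

The langchain namespace has been streamlined to focus on essential building blocks for agents, with legacy functionality moved to langchain-classic.

create_agent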

create_agent is the standard way to build agents in LangChain 1.0. It provides a simpler interface than langgraph.prebuilt.create_react_agent, while offering greater customization potential through middleware.

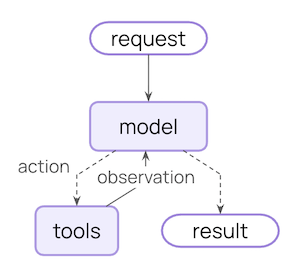

create_agent is built on the basic agent loop: call the model, let it choose tools to execute, and finish when it no longer calls any tools:

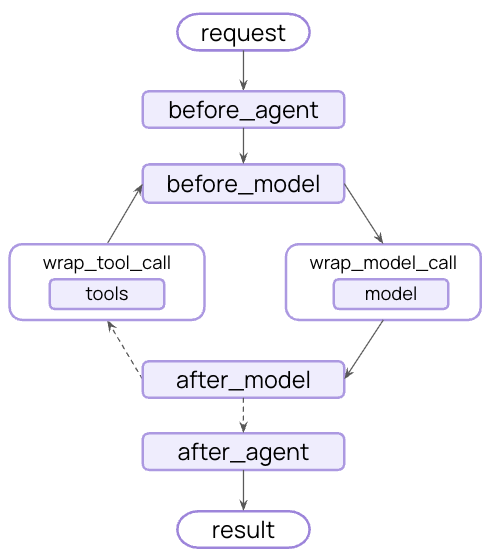
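The control flow of that loop can be illustrated with a library-free sketch. Everything here (the scripted fake_model, the TOOLS registry, the message dicts) is hypothetical and only mirrors the loop described above; it is not how create_agent is implemented.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"It is sunny in {city}.",
}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: requests one tool call, then answers."""
    if not any(m["role"] == "tool" for m in messages):
        return {"role": "ai", "content": "",
                "tool_calls": [{"name": "get_weather", "args": {"city": "Paris"}}]}
    return {"role": "ai", "content": f"Answer: {messages[-1]['content']}", "tool_calls": []}


def run_agent(user_input: str, max_steps: int = 10) -> list[dict]:
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = fake_model(messages)        # 1. call the model
        messages.append(reply)
        if not reply["tool_calls"]:         # 3. no tool calls left: finish
            return messages
        for call in reply["tool_calls"]:    # 2. execute the tools the model chose
            result = TOOLS[call["name"]](**call["args"])
            messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


history = run_agent("What's the weather in Paris?")
print(history[-1]["content"])  # Answer: It is sunny in Paris.
```

The max_steps guard is a design choice of this sketch so that a model that never stops calling tools cannot loop forever.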

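Middleware

Middleware is the defining feature of create_agent. It offers highly customizable entry points, raising the ceiling for what you can build.

Great agents require context engineering: getting the right information to the model at the right time. Middleware helps you control dynamic prompts, conversation summarization, selective tool access, state management, and guardrails through a composable abstraction.

Prebuilt middleware

LangChain provides a few prebuilt middleware for common patterns, including:

- PIIMiddleware: Redact sensitive information before sending it to the model
- SummarizationMiddleware: Condense conversation history when it gets too long
- HumanInTheLoopMiddleware: Require approval for sensitive tool calls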
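To make the redaction idea concrete, here is a standalone sketch that strips email addresses from messages before they would reach a model. The regex, the redact_emails helper, and the [REDACTED_EMAIL] placeholder are assumptions for illustration; they are not PIIMiddleware's actual detection logic or configuration.

```python
import re

# Deliberately simple email pattern; production PII detection needs far more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(messages: list[dict]) -> list[dict]:
    """Return copies of the messages with email addresses replaced."""
    return [
        {**m, "content": EMAIL_RE.sub("[REDACTED_EMAIL]", m["content"])}
        for m in messages
    ]


msgs = [{"role": "user", "content": "Contact me at jane.doe@example.com please."}]
print(redact_emails(msgs)[0]["content"])  # Contact me at [REDACTED_EMAIL] please.
```

Returning new dicts instead of editing in place keeps the original conversation intact, which matters if the unredacted text is still needed locally.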

Custom middleware

You can also build custom middleware to fit your needs. Middleware exposes hooks at each step in an agent’s execution:

To build custom middleware, implement any of these hooks on a subclass of the AgentMiddleware class:

| Hook | When it runs | Use cases |
|---|---|---|
| before_agent | Before calling the agent | Load memory, validate input |
| before_model | Before each LLM call | Update prompts, trim messages |
| wrap_model_call | Around each LLM call | Intercept and modify requests/responses |
| wrap_tool_call | Around each tool call | Intercept and modify tool execution |
| after_model | After each LLM response | Validate output, apply guardrails |
| after_agent | After the agent completes | Save results, clean up |
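The following library-free sketch shows how hooks like these can compose around a single model call. The Middleware base class, the Recorder subclass, the run_model_step helper, and the ordering convention (before_model in list order, wrap_model_call with the first middleware outermost, after_model in reverse) are assumptions for illustration; see the middleware guide for create_agent's actual hook signatures and ordering rules.

```python
from typing import Callable

Handler = Callable[[dict], dict]


class Middleware:
    """Hypothetical base class: every hook is a no-op by default."""

    def before_model(self, state: dict) -> None:
        pass

    def wrap_model_call(self, request: dict, handler: Handler) -> dict:
        return handler(request)

    def after_model(self, state: dict) -> None:
        pass


class Recorder(Middleware):
    """Records when each hook fires so the ordering is visible."""

    def __init__(self, name: str, log: list[str]) -> None:
        self.name, self.log = name, log

    def before_model(self, state: dict) -> None:
        self.log.append(f"{self.name}.before_model")

    def wrap_model_call(self, request: dict, handler: Handler) -> dict:
        self.log.append(f"{self.name}.wrap:enter")
        response = handler(request)  # call the next layer (or the model itself)
        self.log.append(f"{self.name}.wrap:exit")
        return response

    def after_model(self, state: dict) -> None:
        self.log.append(f"{self.name}.after_model")


def _bind(mw: Middleware, nxt: Handler) -> Handler:
    return lambda req: mw.wrap_model_call(req, nxt)


def run_model_step(state: dict, middleware: list[Middleware], model: Handler) -> dict:
    for mw in middleware:               # before_model hooks: list order
        mw.before_model(state)
    handler = model
    for mw in reversed(middleware):     # nest so the first middleware is outermost
        handler = _bind(mw, handler)
    state["response"] = handler({"messages": state["messages"]})
    for mw in reversed(middleware):     # after_model hooks: reverse order
        mw.after_model(state)
    return state


log: list[str] = []
model = lambda req: {"content": f"saw {len(req['messages'])} message(s)"}
state = run_model_step({"messages": ["hi"]}, [Recorder("A", log), Recorder("B", log)], model)
print(log)
```

Running it records A.before_model, B.before_model, A.wrap:enter, B.wrap:enter, B.wrap:exit, A.wrap:exit, B.after_model, A.after_model: each wrap hook fully encloses the ones listed after it, which is what lets a wrapper retry, short-circuit, or rewrite the call.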

Built on LangGraph

Because create_agent is built on LangGraph, you automatically get built-in support for long-running, reliable agents via:

Persistence

Conversations automatically persist across sessions with built-in checkpointing

Streaming

Stream tokens, tool calls, and reasoning traces in real-time

Human-in-the-loop

Pause agent execution for human approval before sensitive actions

Time travel

Rewind conversations to any point and explore alternate paths and prompts
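Persistence and time travel both rest on checkpointing: saving a snapshot of agent state after each step, keyed by a conversation (thread) ID. The in-memory CheckpointStore below is a hypothetical, library-free sketch of that idea; it is not LangGraph's checkpointer API.

```python
import copy
from collections import defaultdict


class CheckpointStore:
    """Hypothetical in-memory store: thread_id -> ordered list of state snapshots."""

    def __init__(self) -> None:
        self._history: dict[str, list[dict]] = defaultdict(list)

    def save(self, thread_id: str, state: dict) -> int:
        """Store a deep copy of state and return its checkpoint index."""
        self._history[thread_id].append(copy.deepcopy(state))
        return len(self._history[thread_id]) - 1

    def latest(self, thread_id: str) -> dict:
        """Resume: return a copy of the most recent snapshot for the thread."""
        return copy.deepcopy(self._history[thread_id][-1])

    def rewind(self, thread_id: str, index: int) -> dict:
        """Time travel: re-save an earlier snapshot so the thread continues from it."""
        snapshot = copy.deepcopy(self._history[thread_id][index])
        self.save(thread_id, snapshot)
        return snapshot


store = CheckpointStore()
state = {"messages": ["hi"]}
first = store.save("thread-1", state)
state["messages"].append("tell me a joke")
store.save("thread-1", state)

# A later session resumes from the latest checkpoint...
print(store.latest("thread-1")["messages"])  # ['hi', 'tell me a joke']
# ...or rewinds to the first one and explores a different path.
branch = store.rewind("thread-1", first)
branch["messages"].append("tell me a fact")
print(branch["messages"])  # ['hi', 'tell me a fact']
```

Deep copies are what keep earlier checkpoints immutable; without them, appending to the live state would silently rewrite history.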

Structured output

create_agent has improved structured output generation:

- Main loop integration: Structured output is now generated in the main loop instead of requiring an additional LLM call

- Structured output strategy: Models can choose between calling tools and using provider-side structured output generation

- Cost reduction: Eliminates extra expense from additional LLM calls

Error handling: Control how structured output errors are handled via the handle_errors parameter to ToolStrategy:

- Parsing errors: Model generates data that doesn’t match desired structure

- Multiple tool calls: Model generates 2+ tool calls for structured output schemas
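The two error cases above can be sketched without LangChain. The WeatherReport schema, the parse_structured_output function, and the feedback strings are hypothetical; they illustrate the general idea of turning a parsing error or an unexpected number of structured-output calls into feedback a model could act on, not ToolStrategy's actual behavior or defaults.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WeatherReport:
    """Hypothetical structured-output schema."""
    city: str
    temperature_c: float


def parse_structured_output(tool_calls: list[dict]) -> tuple[Optional[WeatherReport], Optional[str]]:
    """Return (result, None) on success, or (None, feedback for the model) on error."""
    if len(tool_calls) != 1:
        # Multiple (or zero) structured-output calls: ask for exactly one.
        return None, f"Expected exactly 1 structured output call, got {len(tool_calls)}."
    args = tool_calls[0]["args"]
    try:
        report = WeatherReport(city=str(args["city"]), temperature_c=float(args["temperature_c"]))
    except (KeyError, TypeError, ValueError) as exc:
        # Parsing error: the generated data doesn't match the schema.
        return None, f"Invalid WeatherReport arguments: {exc!r}. Please fix and retry."
    return report, None


call = {"name": "WeatherReport", "args": {"city": "Oslo", "temperature_c": "4.5"}}
print(parse_structured_output([call]))
# (WeatherReport(city='Oslo', temperature_c=4.5), None)
print(parse_structured_output([call, call])[1])
# Expected exactly 1 structured output call, got 2.
print(parse_structured_output([{"name": "WeatherReport", "args": {"city": "Oslo"}}])[1])
# Invalid WeatherReport arguments: KeyError('temperature_c'). Please fix and retry.
```

In an agent loop, the feedback string would be appended to the conversation so the model can regenerate a valid call instead of the run failing outright.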

Standard content blocks

Content block support is currently only available for a subset of provider integrations. Broader support for content blocks will be rolled out gradually across more providers.

The new content_blocks property introduces a standard representation for message content that works across providers.

Benefits

- Provider agnostic: Access reasoning traces, citations, built-in tools (web search, code interpreters, etc.), and other features using the same API regardless of provider

- Type safe: Full type hints for all content block types

- Backward compatible: Standard content can be loaded lazily, so there are no associated breaking changes
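The idea behind a provider-agnostic representation can be sketched as a normalizer that maps provider-specific payloads onto one shared list of typed blocks. The two input formats ("provider_a", "provider_b"), the ContentBlock TypedDict, and the to_content_blocks function are made-up simplifications; they are not real provider payloads or LangChain's content block types.

```python
from typing import Any, Literal, TypedDict


class ContentBlock(TypedDict):
    """Simplified, hypothetical standard block (not LangChain's real type)."""
    type: Literal["text", "reasoning"]
    text: str


def to_content_blocks(provider: str, raw: Any) -> list[ContentBlock]:
    """Map a hypothetical provider-specific payload onto standard blocks."""
    if provider == "provider_a":
        # Format A: a list of parts; reasoning parts are tagged "thinking".
        type_map = {"thinking": "reasoning", "text": "text"}
        return [{"type": type_map[part["kind"]], "text": part["value"]} for part in raw]
    if provider == "provider_b":
        # Format B: reasoning and answer live in separate top-level fields.
        blocks: list[ContentBlock] = []
        if raw.get("reasoning"):
            blocks.append({"type": "reasoning", "text": raw["reasoning"]})
        blocks.append({"type": "text", "text": raw["answer"]})
        return blocks
    raise ValueError(f"unknown provider: {provider}")


a = to_content_blocks("provider_a", [{"kind": "thinking", "value": "2+2=4"}, {"kind": "text", "value": "4"}])
b = to_content_blocks("provider_b", {"reasoning": "2+2=4", "answer": "4"})
# Downstream code reads reasoning the same way, whichever provider produced it.
print(a == b)  # True
print([blk["text"] for blk in a if blk["type"] == "reasoning"])  # ['2+2=4']
```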

Simplified package

LangChain v1 streamlines the langchain package namespace to focus on essential building blocks for agents. The refined namespace exposes the most useful and relevant functionality:

Namespace

| Module | What’s available | Notes |
|---|---|---|
| langchain.agents | create_agent, AgentState | Core agent creation functionality |
| langchain.messages | Message types, content blocks, trim_messages | Re-exported from langchain-core |
| langchain.tools | @tool, BaseTool, injection helpers | Re-exported from langchain-core |
| langchain.chat_models | init_chat_model, BaseChatModel | Unified model initialization |
| langchain.embeddings | Embeddings, init_embeddings | Embedding models |

Many of these are re-exported from langchain-core for convenience, which gives you a focused API surface for building agents.

langchain-classic

Legacy functionality has moved to langchain-classic to keep the core packages lean and focused.

What’s in langchain-classic:

- Legacy chains and chain implementations

- Retrievers (e.g. MultiQueryRetriever or anything from the previous langchain.retrievers module)

- The indexing API

- The hub module (for managing prompts programmatically)

- langchain-community exports

- Other deprecated functionality

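If you use any of this functionality, install the langchain-classic package (for example, pip install langchain-classic).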

Migration guide

See our migration guide for help updating your code to LangChain v1.

Reporting issues

Please report any issues discovered with 1.0 on GitHub using the 'v1' label.

Additional resources

LangChain 1.0

Read the announcement

Middleware Guide

Deep dive into middleware

Agents Documentation

Full agent documentation

Message Content

New content blocks API

Migration guide

How to migrate to LangChain v1

GitHub

Report issues or contribute

See also

- Versioning - Understanding version numbers

- Release policy - Detailed release policies